The Inception Series

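For convolutional neural networks, increasing depth and width can improve performance significantly. A larger network, however, has more parameters, which makes it more prone to overfitting, and it also consumes considerably more computational resources. The Inception family of networks is Google's exploration of these problems.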

Inception v1 (Going Deeper with Convolutions)

Inception considers replacing a fully connected network architecture with a sparsely connected one.

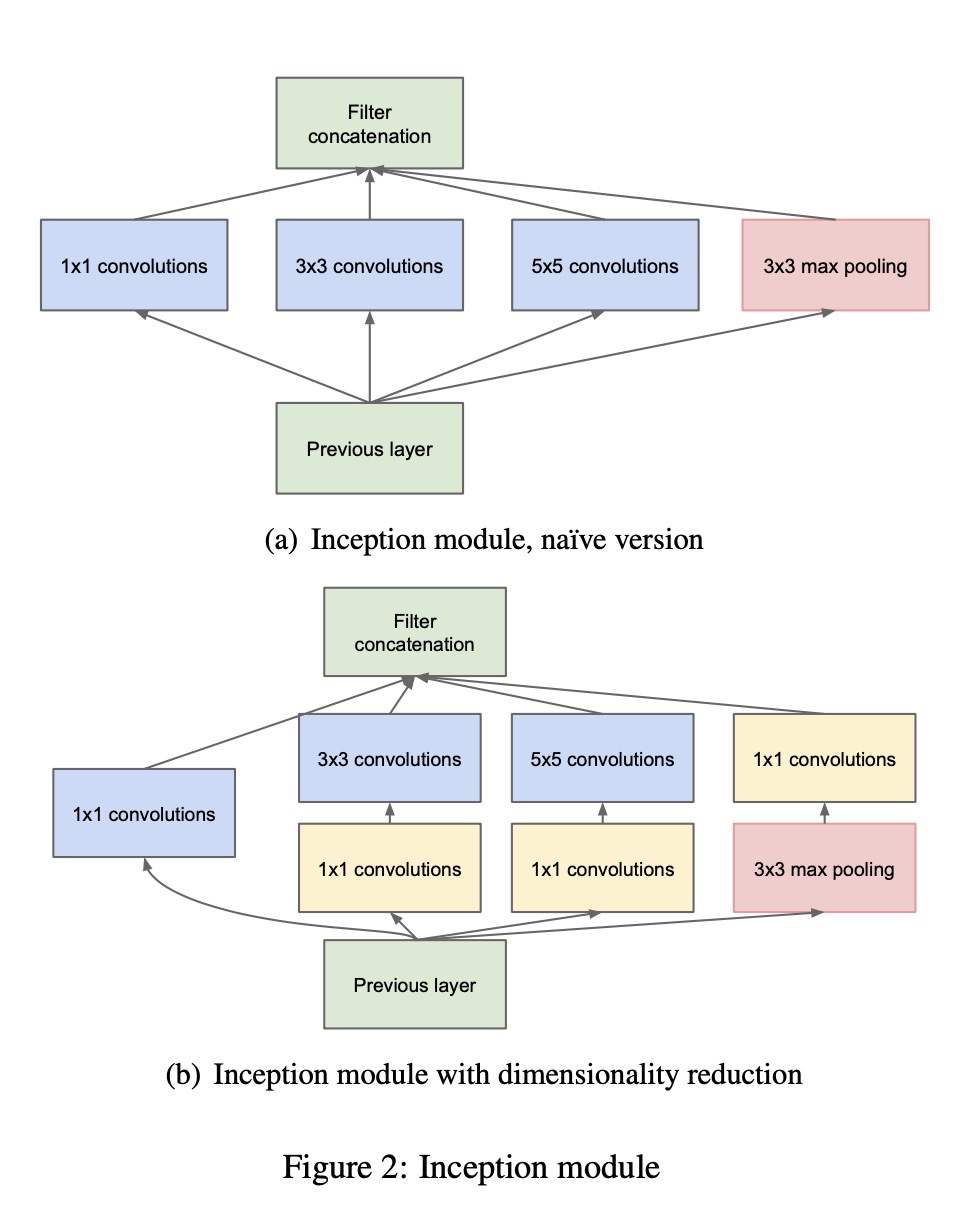

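Inception applies convolution kernels of several sizes within the same layer to capture features at different scales, which lets it represent more complex feature patterns. The outputs of the branches are then concatenated. Figure (a) shows the naive Inception module; in Figure (b), additional 1×1 convolutions are inserted for dimensionality reduction.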
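To make the effect of the 1×1 reduction concrete, here is a rough parameter count for the two variants. It is only a sketch: the channel numbers (192 input channels; 64, 96, 128, 16, 32, 32 filters) are assumed from the inception (3a) stage of GoogLeNet, and `conv_params` is a hypothetical helper, not part of Keras.

```python
def conv_params(in_ch, out_ch, k):
    """Weights plus biases of a k x k convolution layer."""
    return k * k * in_ch * out_ch + out_ch

in_ch = 192  # assumed input channels (GoogLeNet inception 3a)

# Naive module: 1x1, 3x3 and 5x5 convolutions applied directly to the input.
# The pooling branch has no parameters and passes all input channels through.
naive = (conv_params(in_ch, 64, 1)
         + conv_params(in_ch, 128, 3)
         + conv_params(in_ch, 32, 5))
naive_out_ch = 64 + 128 + 32 + in_ch

# Module with reduction: 1x1 convolutions shrink the channel count
# before the 3x3 and 5x5 convolutions and after the pooling branch.
reduced = (conv_params(in_ch, 64, 1)
           + conv_params(in_ch, 96, 1) + conv_params(96, 128, 3)
           + conv_params(in_ch, 16, 1) + conv_params(16, 32, 5)
           + conv_params(in_ch, 32, 1))
reduced_out_ch = 64 + 128 + 32 + 32

print(naive, naive_out_ch)
print(reduced, reduced_out_ch)
```

Under these assumptions the reduced module needs fewer than half the parameters of the naive one, and its concatenated output is also narrower, so the next module starts from fewer channels.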

Keras code example

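    # Imports assume the Keras bundled with TensorFlow.
    from tensorflow.keras.layers import Conv2D, MaxPool2D, concatenate

    # Assumed initializers: the original snippet does not show their definitions.
    kernel_init = 'glorot_uniform'
    bias_init = 'zeros'

    def inception_module(x,
                         filters_1x1,
                         filters_3x3_reduce,
                         filters_3x3,
                         filters_5x5_reduce,
                         filters_5x5,
                         filters_pool_proj,
                         name=None):
        # Branch 1: plain 1x1 convolution.
        conv_1x1 = Conv2D(filters_1x1, (1, 1), padding='same', activation='relu', kernel_initializer=kernel_init, bias_initializer=bias_init)(x)

        # Branch 2: 1x1 reduction followed by a 3x3 convolution.
        conv_3x3 = Conv2D(filters_3x3_reduce, (1, 1), padding='same', activation='relu', kernel_initializer=kernel_init, bias_initializer=bias_init)(x)
        conv_3x3 = Conv2D(filters_3x3, (3, 3), padding='same', activation='relu', kernel_initializer=kernel_init, bias_initializer=bias_init)(conv_3x3)

        # Branch 3: 1x1 reduction followed by a 5x5 convolution.
        conv_5x5 = Conv2D(filters_5x5_reduce, (1, 1), padding='same', activation='relu', kernel_initializer=kernel_init, bias_initializer=bias_init)(x)
        conv_5x5 = Conv2D(filters_5x5, (5, 5), padding='same', activation='relu', kernel_initializer=kernel_init, bias_initializer=bias_init)(conv_5x5)

        # Branch 4: 3x3 max pooling followed by a 1x1 projection.
        pool_proj = MaxPool2D((3, 3), strides=(1, 1), padding='same')(x)
        pool_proj = Conv2D(filters_pool_proj, (1, 1), padding='same', activation='relu', kernel_initializer=kernel_init, bias_initializer=bias_init)(pool_proj)

        # Concatenate all branches along the channel axis (channels-last layout).
        output = concatenate([conv_1x1, conv_3x3, conv_5x5, pool_proj], axis=3, name=name)
        return output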

Inception v2 and v3 (Rethinking the Inception Architecture for Computer Vision)

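Inception v2 focuses on further reducing computational cost. The authors mainly factorize the convolution operations, decomposing large convolutions into smaller ones.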
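As a small worked example of why this factorization saves computation, the sketch below compares one 5×5 convolution with two stacked 3×3 convolutions. It assumes stride 1 and the same channel count in every layer, and ignores biases; both helper functions are hypothetical names introduced for illustration.

```python
def stacked_receptive_field(kernel_sizes):
    """Receptive field of stride-1 convolutions applied one after another."""
    field = 1
    for k in kernel_sizes:
        field += k - 1
    return field

def weights_per_channel_pair(kernel_sizes):
    """Weights per (input channel, output channel) pair, ignoring biases."""
    return sum(k * k for k in kernel_sizes)

single_5x5 = weights_per_channel_pair([5])
two_3x3 = weights_per_channel_pair([3, 3])

# Relative saving of the factorized version over the single large kernel.
saving = 1 - two_3x3 / single_5x5

print(stacked_receptive_field([5]), stacked_receptive_field([3, 3]))
print(single_5x5, two_3x3, round(saving, 2))
```

Both variants cover the same 5×5 input window, but the stacked version uses 18 weights per channel pair instead of 25, a saving of about 28% under the stated assumptions.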

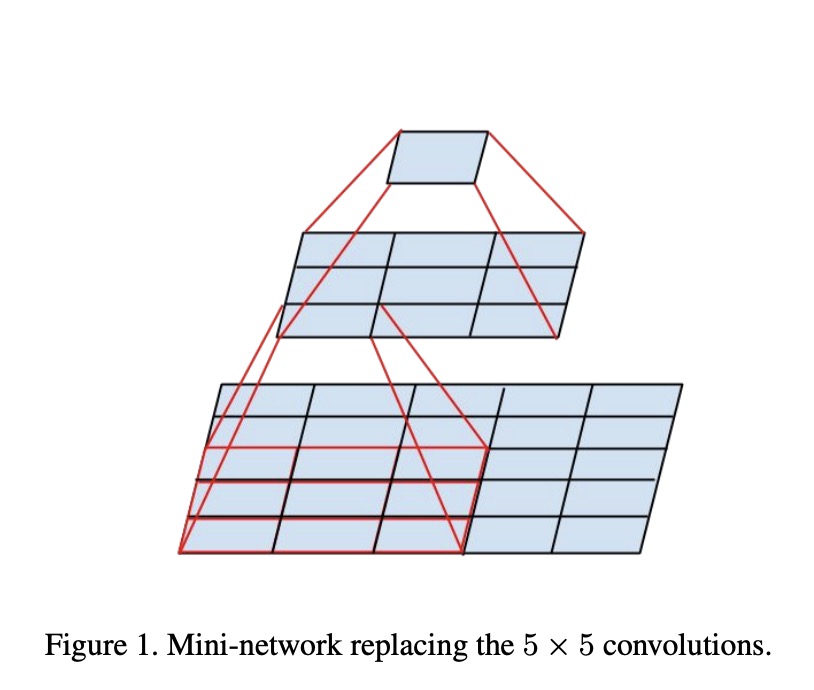

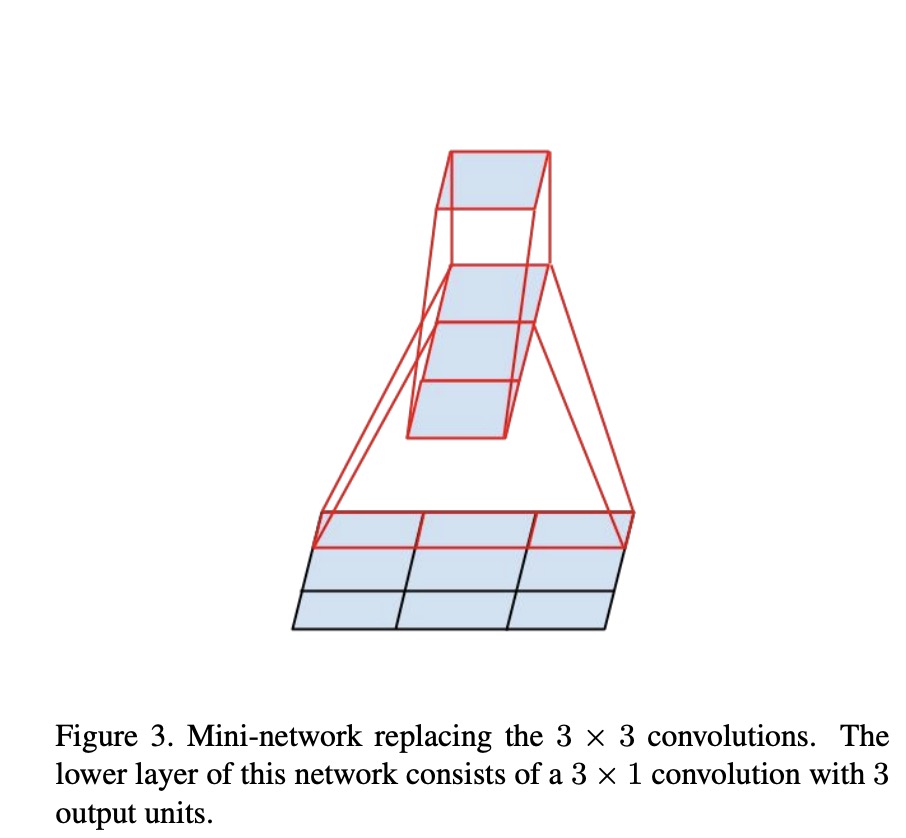

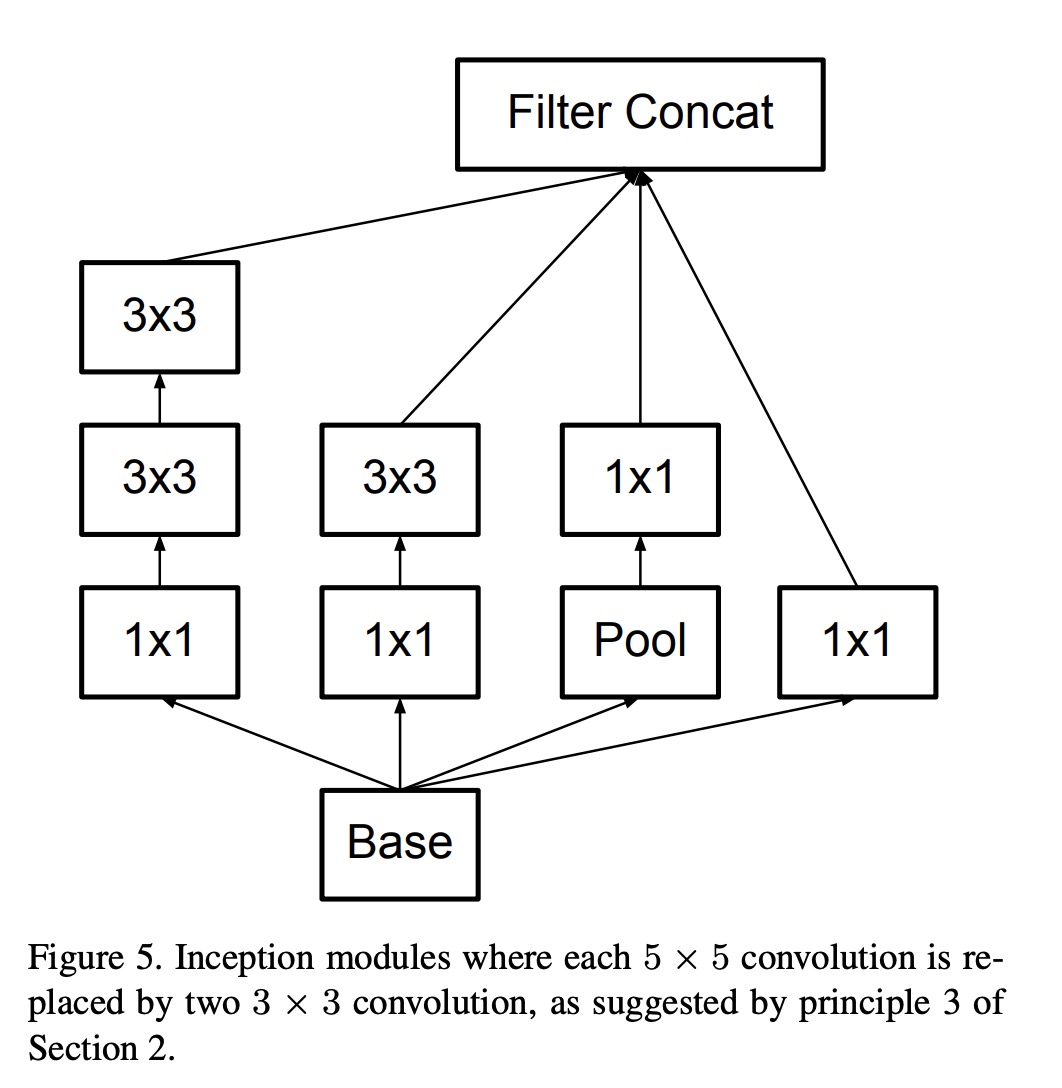

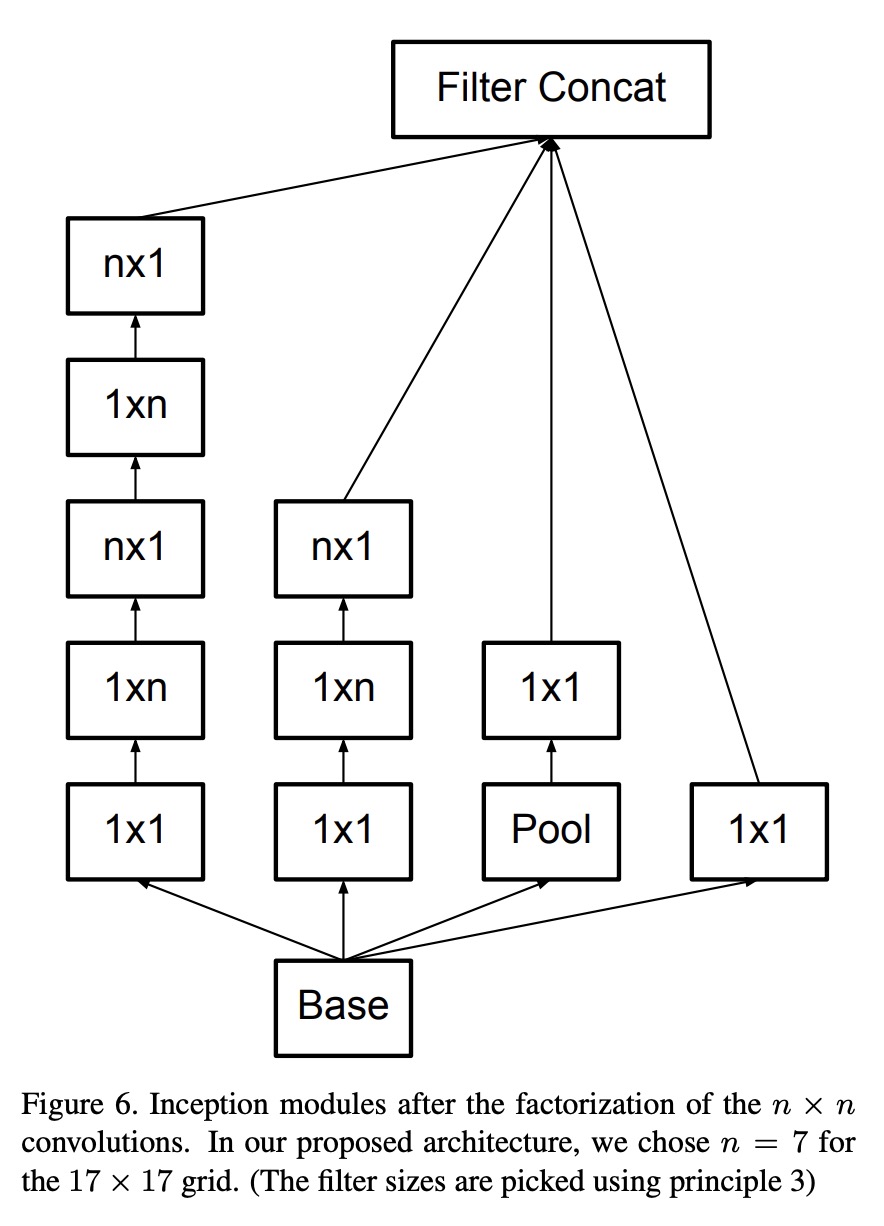

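Figure 1: a 5×5 convolution is replaced by 3×3 convolutions. Figure 3: a 3×3 convolution is factorized into a 1×3 and a 3×1 convolution. (The figure numbers follow the paper.)

Figure 5 factorizes each 5×5 convolution into two 3×3 convolutions, and Figure 6 factorizes an n×n convolution into a 1×n and an n×1 convolution.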
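The 1×n and n×1 factorization can be illustrated with a small pure-Python sketch. It assumes a rank-1 (separable) 3×3 kernel and no nonlinearity between the two passes, in which case the factorized version reproduces the full convolution exactly. In a real network the two layers are learned independently and an activation usually sits between them, so the factorized form is a cheaper substitute rather than an exact equivalent. `conv2d_valid` and the kernel values are hypothetical.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation on nested lists (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

col = [1, 2, 1]    # n x 1 kernel (hypothetical values)
row = [1, 0, -1]   # 1 x n kernel (hypothetical values)

# Rank-1 3x3 kernel: the outer product of col and row.
full_3x3 = [[c * r for r in row] for c in col]

# Small deterministic 6x6 test image.
image = [[(3 * i + 5 * j) % 7 for j in range(6)] for i in range(6)]

direct = conv2d_valid(image, full_3x3)

# 1x3 pass followed by a 3x1 pass, with no nonlinearity in between.
row_pass = conv2d_valid(image, [row])
factored = conv2d_valid(row_pass, [[c] for c in col])

n = len(col)
weights_full, weights_factored = n * n, 2 * n

print(direct == factored, weights_full, weights_factored)
```

For n = 3 the factorized pair uses 6 weights instead of 9, and the gap widens as n grows, since the cost scales with 2n rather than n².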